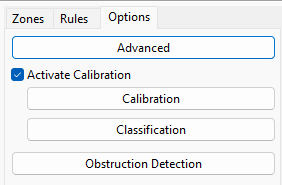

Selecting the Options tab displays the following screen:

Clicking Advanced Options opens the following screen:

This screen provides several fine adjustments and settings required for the analytics engine to operate:

- Tracker selection: Selects the tracking engine to be used. The options are Object Tracker, in which the engine learns the scene and analyzes its pixels to separate objects from the background, and People Tracker (Deep Learning) / Object Tracker (Deep Learning), which are exclusive to professional analytics. These trackers use deep learning to analyze the data, and when they start, the following message is displayed on the Surveillance Client:

![]()

This message indicates that the engine is initializing the analytics models for the GPU installed in the server (CUDA drivers must be installed on the server). Initialization can take up to 60 minutes per model, depending on the GPU. Once it finishes, the message disappears, and initialization does not need to be repeated unless the GPU is replaced or its driver is updated.

- Tracker Options: The available options depend on the selected tracker; options that do not apply to it are disabled. The options are:

  - Initial movement required: When checked, the system waits for an object's initial movement before tracking it. This option is available only for the Deep Learning Object Tracker. Because a deep learning model also recognizes stationary objects (for example, a parked car), this option restricts tracking to objects whose first movement has been detected.

  - Minimum object size for tracking (pixels): Minimum size, in pixels, for the system to consider an object.

  - Maximum object size for tracking (pixels): Maximum size, in pixels, for the system to consider an object.

  - Maximum time to track stationary objects (seconds): Time, in seconds, the system waits before considering a stationary object part of the environment.

  - Time for a stationary object to be considered abandoned (seconds): Time, in seconds, the system must wait before considering a stationary object abandoned.

  - Motion Sensitivity: Sets how sensitive the system is to motion when deciding whether a moving region is an object rather than part of the environment.

  - Use Deep Learning Object Classifier: Check this option to classify objects using deep learning instead of the object tracker's own classification.

  - Calibration Filter: Prevents objects that are too large or too small from being tracked and causing false alarms. It also helps when the Object Tracker detects a large amount of motion caused by lighting changes, or when a Deep Learning Tracker recognizes very large or very small features as valid objects. Whether an object is large or small is determined from the metadata produced when Calibration is enabled. When the Calibration Filter is active, an object is valid only if it meets all of the following criteria:

    - Object height greater than 0.5 m

    - Object height less than 6 m

    - Object area greater than 0.5 m²

    - Object area less than 50 m²
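The Calibration Filter criteria amount to an all-or-nothing check on an object's calibrated size. The Python sketch below illustrates that logic only; it is not the engine's implementation. The `CalibratedObject` type and its field names are hypothetical stand-ins for the calibration metadata, and the strict inequalities are an assumption based on the wording of the criteria.

```python
from dataclasses import dataclass

# Thresholds taken from the Calibration Filter criteria.
MIN_HEIGHT_M = 0.5
MAX_HEIGHT_M = 6.0
MIN_AREA_M2 = 0.5
MAX_AREA_M2 = 50.0


@dataclass
class CalibratedObject:
    """Hypothetical container for one tracked object's calibrated metadata."""
    height_m: float  # real-world height in metres
    area_m2: float   # real-world area in square metres


def passes_calibration_filter(obj: CalibratedObject) -> bool:
    """Return True only if the object meets all four criteria (strict bounds assumed)."""
    return (
        MIN_HEIGHT_M < obj.height_m < MAX_HEIGHT_M
        and MIN_AREA_M2 < obj.area_m2 < MAX_AREA_M2
    )


if __name__ == "__main__":
    samples = {
        "person": CalibratedObject(height_m=1.7, area_m2=0.8),
        "leaf": CalibratedObject(height_m=0.1, area_m2=0.01),
        "large shadow": CalibratedObject(height_m=8.0, area_m2=120.0),
    }
    for name, obj in samples.items():
        # person -> True, leaf -> False, large shadow -> False
        print(name, passes_calibration_filter(obj))
```

Because every criterion must hold, a single out-of-range measurement (for example, a height of 8 m) is enough to discard the object.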

- Scene change detection: Lets the standard Object Tracker recognize that the scene has changed significantly and must be relearned. The options are:

  - Disabled: Scene change detection is disabled.

  - Automatic: Recommended in most cases; the system automatically recognizes when the scene changes significantly.

  - Manual: Uses manually defined scene change parameters:

    - Time limit (seconds): Time limit for triggering the learning of a new scene.

    - Area limit (%): Percentage of the image that must change for the system to relearn the scene.

  - Adaptive: The system automatically adapts to changes in the scene. Recommended for thermal cameras.
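How the two Manual parameters interact is not spelled out in the description. The Python sketch below assumes one plausible reading: a relearn is triggered once the changed part of the image stays at or above the Area limit for at least the Time limit. The class and method names are hypothetical, and the actual engine may combine the two limits differently.

```python
from typing import Optional


class ManualSceneChangeDetector:
    """Hypothetical model of the Manual scene change parameters.

    Assumption: a relearn is triggered when the changed portion of the image
    stays at or above `area_limit_pct` for at least `time_limit_s` seconds.
    """

    def __init__(self, time_limit_s: float, area_limit_pct: float) -> None:
        self.time_limit_s = time_limit_s
        self.area_limit_pct = area_limit_pct
        self._change_started_at: Optional[float] = None  # start of the current large change

    def update(self, timestamp_s: float, changed_area_pct: float) -> bool:
        """Feed one frame's measurement; return True when the scene should be relearned."""
        if changed_area_pct < self.area_limit_pct:
            # Change is below the Area limit: reset the timer.
            self._change_started_at = None
            return False
        if self._change_started_at is None:
            self._change_started_at = timestamp_s
        if timestamp_s - self._change_started_at >= self.time_limit_s:
            # Time limit reached: relearn and start timing afresh.
            self._change_started_at = None
            return True
        return False


if __name__ == "__main__":
    detector = ManualSceneChangeDetector(time_limit_s=5.0, area_limit_pct=60.0)
    # (time in seconds, percentage of the image that changed)
    frames = [(0, 10), (1, 70), (3, 75), (6, 80), (7, 20)]
    for t, pct in frames:
        print(t, detector.update(t, pct))  # True only at t=6
```

Under this reading, a brief large change (shorter than the Time limit) never triggers relearning, which keeps short disturbances from resetting the learned scene.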

- Tracked Objects Detection Point: The point on each tracked object from which rules are activated. Configure its position according to the scene:

  - Default: The point is centered at the bottom of the object (the same position as Central Bottom).

  - Centroid: The point is the center of the object.

  - Central Bottom: The point is centered at the bottom of the object.

Figure: Centroid and Central Bottom detection points.
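The detection point options reduce to simple arithmetic on an object's bounding box. The sketch below assumes a hypothetical `BoundingBox` in image pixels with the origin at the top-left corner and y increasing downward (a common image convention, assumed here); the mode strings are illustrative and are not configuration values of the product.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class BoundingBox:
    """Hypothetical bounding box in pixels (origin top-left, y grows downward)."""
    x: float
    y: float
    width: float
    height: float


def detection_point(box: BoundingBox, mode: str = "default") -> Tuple[float, float]:
    """Return the point that rules would be evaluated against.

    "default" and "central_bottom" both give the bottom-centre of the box;
    "centroid" gives the centre of the box.
    """
    center_x = box.x + box.width / 2
    if mode == "centroid":
        return (center_x, box.y + box.height / 2)
    if mode in ("default", "central_bottom"):
        return (center_x, box.y + box.height)
    raise ValueError(f"unknown detection point mode: {mode!r}")


if __name__ == "__main__":
    person = BoundingBox(x=100.0, y=50.0, width=40.0, height=120.0)
    print(detection_point(person, "centroid"))        # (120.0, 110.0)
    print(detection_point(person, "central_bottom"))  # (120.0, 170.0)
```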

- Event redisplay time (seconds): Sets a global timer that controls how soon an event can be retriggered for the same object and rule.
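The Event redisplay time behaves like a cooldown keyed on the pair (object, rule). The Python sketch below is a hypothetical model of that behavior, not the product's code; it assumes that a suppressed event does not restart the timer, which the description does not specify. The object and rule identifiers are illustrative.

```python
from typing import Dict, Hashable, Tuple


class EventRetriggerTimer:
    """Hypothetical global re-trigger timer shared by all rules.

    An event for a given (object, rule) pair is reported only if at least
    `redisplay_time_s` seconds have passed since the last reported event for
    that same pair. Other pairs are unaffected.
    """

    def __init__(self, redisplay_time_s: float) -> None:
        self.redisplay_time_s = redisplay_time_s
        self._last_reported: Dict[Tuple[Hashable, Hashable], float] = {}

    def should_report(self, object_id: Hashable, rule_id: Hashable, timestamp_s: float) -> bool:
        key = (object_id, rule_id)
        last = self._last_reported.get(key)
        if last is not None and timestamp_s - last < self.redisplay_time_s:
            return False  # same object and rule fired too recently: suppress
        self._last_reported[key] = timestamp_s  # only reported events restart the timer
        return True


if __name__ == "__main__":
    timer = EventRetriggerTimer(redisplay_time_s=10.0)
    print(timer.should_report("obj-1", "intrusion", 0.0))   # True
    print(timer.should_report("obj-1", "intrusion", 4.0))   # False (within 10 s)
    print(timer.should_report("obj-2", "intrusion", 4.0))   # True (different object)
    print(timer.should_report("obj-1", "intrusion", 12.0))  # True (10 s elapsed)
```

Keying on the pair means one loitering object cannot flood a rule with repeated events, while a second object triggering the same rule is still reported immediately.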